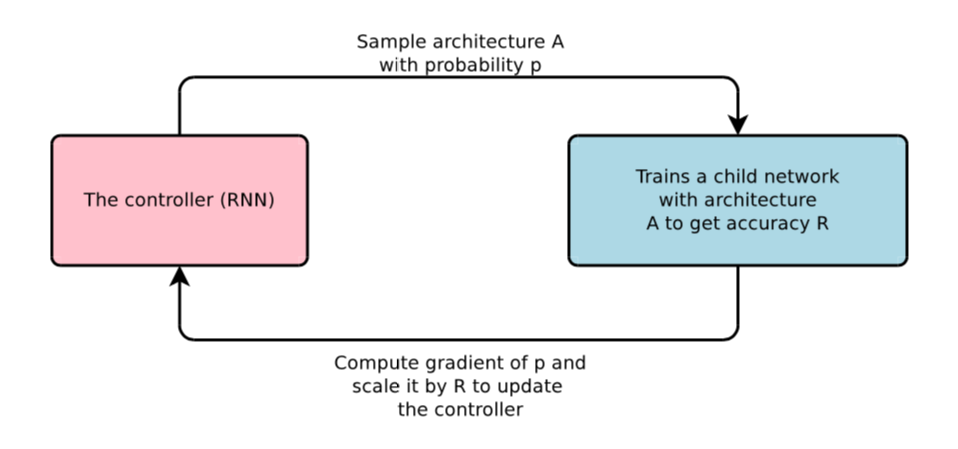

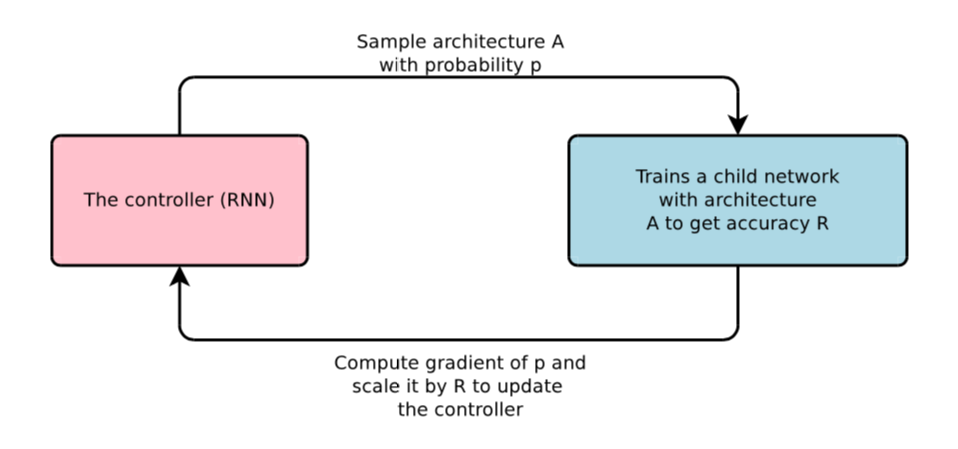

In the last couple of years, artificial neural networks (ANNs) have achieved new state-of-the-art results in a multitude of research areas. Their data-driven training process usually requires very little in-depth domain knowledge. However, successful training demands experience with ANNs, their design, and their optimization; in other words, it requires expensive hyperparameter optimization. A recent research trend aims to facilitate and automate various aspects of this process. In particular, automation via Reinforcement Learning (RL) is becoming increasingly popular. To this end, we will introduce the most common state-of-the-art RL methods and discuss their application to a selection of modern AutoML papers.

Date: Wednesdays (11:00-12:00)

Location: Virtual event. Zoom link and password will be shared via email.

Lecturers: Prof. Dr. Laura Leal-Taixé and Tim Meinhardt.

ECTS: 5

SWS: 2

We do not hold a pre-course meeting. Students must register in the Matching-System between February 11th and 16th. See the Matching-System FAQ for more details.

The matching will take the previous I2DL or DL4CV grades into account.

After the final matching is announced on February 25th, we will send an email with further information and the list of papers. Matched students have until the first meeting (April 14th) to familiarize themselves with the papers.

Please feel free to suggest your preferred AutoML paper.